Brooklyn-based Volvox Labs (VVOX) tapped into the disguise Extended Reality (xR) workflow with Unreal Engine to create a stunning visual experience for Dubfire’s recent livestreamed concert from The Met Cloisters Museum in New York City.

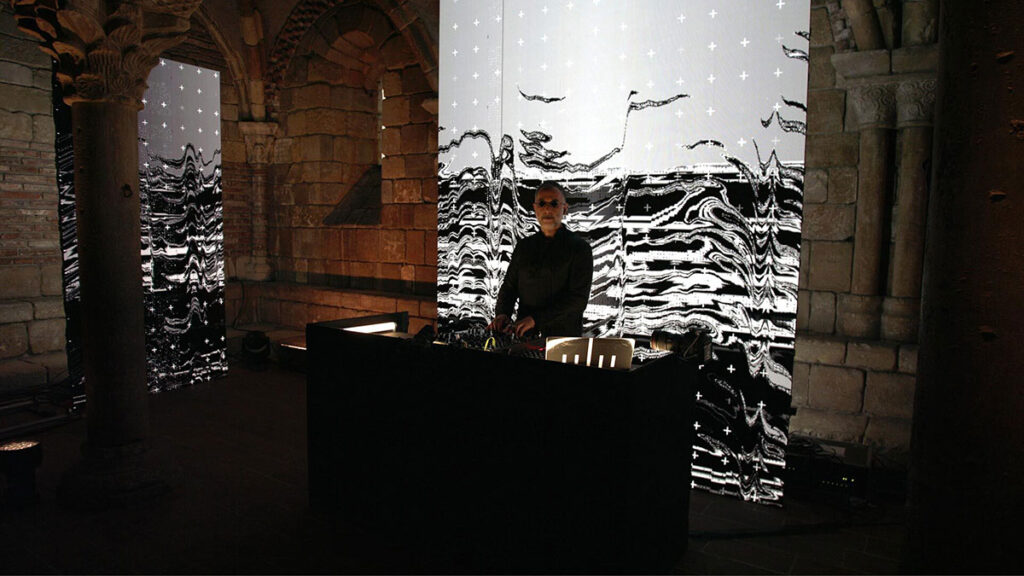

Part of the Sonic Cloisters virtual electronic music series, which explores the parallels between techno and the art of the Middle Ages, Dubfire’s xR performance marked the first time the Cloisters had hosted such a digitally enhanced display of its artwork.

“It was amazing to be able to take this project into such a unique location for one day and basically launch a full xR experience without any kind of rehearsal,” explains Kamil Nawratil, Creative Director at VVOX. “We would never have thought of delivering this kind of project without the disguise and Unreal Engine workflows. The creative possibilities, the photorealism of the content and the stability of the system were unbelievable.”

VVOX had been delving deep into xR with its recent investment in a disguise vx 2 media server and rx render node, so delivering Dubfire’s livestream in xR was a “natural choice” for its team. “You could say that everything led up to this point,” says Nawratil. “We learned about RenderStream and how to use it to plug Unreal Engine into disguise a while ago; we also have an LED volume here in our studio that helped us learn the workflow. But for this scenario we decided to use the actual architecture of the venue and mix it into the set design. It was a huge opportunity to evolve xR into something different.”

Dubfire’s livestream originated from the beautiful 12th-century Pontaut Chapter House in the Cloisters, a meeting place for monks that was formerly part of an abbey in Pontaut, France. To complement the setting, VVOX developed a set design driven as much by the look and feel of the medieval location as by the techno tempo of the music. The design faded in and out of five different Unreal Engine worlds, or ‘chapters’, with three to five minutes of live camera footage of the artist in the venue between them.

“The big trick was essentially having an exact replica of the room in Unreal Engine,” says Nawratil. “We did an architectural scan of the room and, with the site tracking and spatial calibration in disguise, it all snapped into place.”

VVOX had just two weeks to build a full playback system, with no chance to rehearse with Dubfire and confirm that it would work on site. The team also had to run tests with the stYpe camera tracking system using floor markers. Although VVOX had a handle on the workflow in its studio, the team understood that virtual environments were a completely different matter, and this shoot integrated a physical environment as well.

The disguise virtual zoom feature came in handy during the process. “The room we were shooting in was 30 feet wide by maybe 20 feet deep,” says Nawratil. “So the virtual zoom was a powerful tool that allowed us to build these additional worlds outside of the virtual room or even give the space a little more breathing room.”

Having the venue in Unreal Engine gave VVOX the ability to build different objects that would correspond to the real world, such as AR graphics wrapping around two columns in the center of the room. “Because we couldn’t set down an LED floor, we had to obscure the table and the gear the artist was playing on with an AR element so the set extension made visual sense,” Nawratil explains.

“We were so pleased to essentially take xR out of xR, in the sense of leaving the volume that we’re used to working in and moving to a live setting – still doing what xR is supposed to do but also taking advantage of the architecture of a very unique space,” Nawratil concludes.